From 60 Frames per Second to 500 in Haskell

Haskell is often advertised as fast, easy to parallelize and to optimize. But how much of that is really true? We are going to demonstrate it using a game we are building, including how many changes we had to introduce to increase the game speed by 700% on desktop, how we managed to go from increasing memory consumption in the order of hundreds of megabytes down to constant memory consumption of only 3MB. We’ll also see the impact it had on Android.

The sales pitch

We’ve all heard (or used) the following arguments about Haskell and FP:

-

Because haskell is pure, functions called with the same arguments always return the same result (aka. referential transparency). With no side effects, you can cache the results and run the same thing twice on different cores, if the value is needed in two places. It will always be the same value.

-

One cannot change a pure value, only create new values based on “old” ones. This means it’s ok to share data between threads, since values cannot change anyway.

-

For the same reason, parallelizing is easy: using the parallel evaluation function par, or splitting data in smaller chunks with rpar, can dramatically speed up the execution.

-

Because haskell is lazy, expressions do not get evaluated unless the values are actually required. When they do, they are only evaluated down to the required level.

This all sounds great but, how much of this is true and how much does it matter in practice? A few weeks ago we showed a video of the game running on Android and on desktop. It was giving around 10-15 FPS on Android and 60-70 FPS on desktop. It was not enough, not even close to what we wanted. If the sales pitch was true, it should be trivial to solve.

The steps we will show below were all very similar: first, we try to understand how time/memory is being spent, by using a very simple compilation flag and/or extra tool. Next, we address one problem at a time, often requiring to change less than 10 lines of code each.

So, the first thing to do was to understand how time was being spent.

Profiling

Because profiling the Android code itself is harder (with ndk tools) and Haskell tools are very good for profiling, the desktop was used to optimize both versions. Remember: except for the main module (which does not exist on Android), the code is the same.

Following RealWorldHaskell and the Haskell wiki, we started getting profiling information. That’s incredibly easy: all we had to do is recompile the dependencies with profiling enabled (–enable-library-profiling), and enable profiling when calling cabal for keera-breakout.

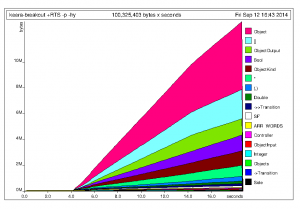

Running the program with profiling information revealed that it was consuming hundreds of megabytes, with only 70% of the time doing productive work. It also said that most of the time was being spent on SDL (more than 75%):

keera-breakout +RTS -p -hy -RTS

total time = 18.63 secs (18629 ticks @ 1000 us, 1 processor)

total alloc = 428,186,280 bytes (excludes profiling overheads)

COST CENTRE MODULE %time %alloc

createTextureFromSurface.\.\ Graphics.UI.SDL.Render 78.7 0.3

renderCopy.\.\.\.\ Graphics.UI.SDL.Render 6.3 0.4

renderPresent Graphics.UI.SDL.Render 3.1 0.0

fdComp FRP.Yampa 1.0 11.7

printAlignLeft Display 0.4 1.7

renderCopy.\.\.\ Graphics.UI.SDL.Render 0.4 2.3

fmap Data.IdentityList 0.4 8.0

mkFinalizedTexture Graphics.UI.SDL.Raw 0.3 4.0

paintObject Display 0.3 4.8

displayObjects Display 0.2 1.5

fpAux.tf FRP.Yampa 0.2 6.7

cpXX.tf FRP.Yampa 0.2 4.7

paintObject.(...) Display 0.2 3.1

...

Optimising SDL calls

The first thing to do was to avoid unnecessary SDL operations. Some of the code was a conversion from SDL 1.2 to SDL2. In SDL2, textures should be used in place of surfaces, but the code was converting the old data structures at every cycle. Manually adding textures to the resource manager in place of the old-typed values was enough. Increase: 10FPS-20FPS (on desktop).

Still, most of the time was still being spent on SDL. It was time for more drastic changes. C does not annotate pure functions as such, but the semantics of SDL make some data conversions referentially transparent (remember: same args, same result). In Haskell, impure functions (with side effects) have a very specific type signature, which prevents certain optimisations that could break things (the machinery is very simple and reusable, you can find more about IO here and here). So, the next was to find SDL functions that would always return the same result, and tell the Haskell compiler that it was OK to cache those results. In particular, because text barely changes from one frame to another, all text-rendering calls could be cached. We did this with unsafePerformIO (this is one of the very few cases in which its use is justified, and the only case in years in which we have needed to use it). Another 15FPS gained there.

Memory consumption

It still felt that the game should run much faster, it’s not a very complex one. It was time to understand what was taking the memory. For that, the runtime system flags -hc and -hy were used (the former shows memory consumption per operation, the latter per type; check out this chapter to learn more). It revealed an interesting profile:

Memory allocation profile broken down by type

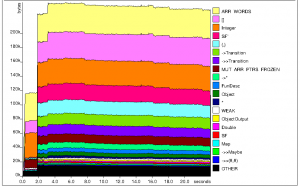

So, objects were being accumulated in ever-increasing amounts, and taking most of the memory. The game produces new objects based on old objects. New objects are evaluted (painted) at every iteration, so there was no apparent reason why old ones shouldn’t be garbage collected. The problem was that some object fields weren’t always being used, so old objects were being kept around for that reason. The solution was simple: adding bangs!

Bangs are strictness annotations that tell the compiler to force evaluation of a value constructor argument. Where? In the Object definition (you can see a similar one here, which suffers from the same symptom, and try it yourself). That was all. The game was now running smoother than ever, going from 90 to over 300 FPS. At this point we were hitting 18 FPS average on Android (with a normal variable range of 11-27), which is quite close to the 25 FPS that’s necessary to make animations smooth.

Keera Breakout memory allocation profile by type after adding (strictness) bangs.

Adding parallelism

Parallelism is often advertised as easy to introduce in Haskell. In fact, the compiler does it for you automatically, using several threads during execution when the right compilation flags are enabled. This game uses Yampa and the first attempt was to try to introduce the changes there. However, Yampa’s collection-handling combinators do not use collections, traversables or lists, but Functors, and the Functor type class does not let us split something in two.

Instead, it was time to see if parallelism could be introduced in the game itself. The way to do so was also quite obvious: we should evaluate the physics and collisions by splitting the block list in two (a very adhoc form of space partitioning). The code was literally what Simon Marlow wrote in chapter two of his Parallel and Concurrent Programming in Haskell book (with an alpha-renaming). 5 lines of code, plus the import, plus one line in the package (cabal) file specifying a dependency on the Parallel library. That was all. Speed increase: between 75 and 100 FPS. Now the game regularly hits 500 FPS. At these speeds, the physics works fantastically well on desktop, and objects never ever (visibly) overlap or pass through one another.

Adding concurrency on Android

Still, the game on Android was running at less than 25 FPS and, with less than ~30FPS, the physics looks funny because objects can easily pass through walls (or at least, overlap with them quite clearly for a few milliseconds). Above 15 FPS, the UI runs smoothly, so it was time to separate the disassociate the physics code from the renderer.

What was required for this? Just two thread-safe mutable variables (MVars) and two threads. Upon initialisation, the renderer was receiving and keeping an MVar from which to read the game state and spawning a new thread. That required 10 lines of code to be changed in the renderer. The game module was now, at every game loop iteration, overwriting the old value of the MVar. Change to the game module: 5 lines of code. The result on Android, the renderer kept working at 15 FPS, but now the physics was going at 30FPS, which was already very smooth. Judge by yourself.

So, to make a long story short: 5 changes that involved profiling, simplifying and parallelising the code. Result: a speed increase of x7 on desktop that makes the game run amazingly, and a reasonably fast and stable execution on Android.

What’s next?

We hope to have convinced you with this and previous articles that programming in Haskell is not only easier and elegant, but fast, portable and really worth trying. If you were considering to write a game, give Haskell a chance! :)

The game is getting close to running perfectly on Android. With only minor optimisations now it will run fine on even slower devices and will be ready for the market. So far we’ve only needed to use basic optimisations. Imagine how far we could get with more advanced techniques.

What we are showing is only a pre-demo, but we have a whole game design with custom assets, levels, music and powerups and more. All of them are drafted or ready to be incorporated in the game. Testing will real users will come next, refining it until players are happy.

The desktop version has sound, but it is (manually) disabled on Android. It will be enabled as well.

Since this is much more demanding than our GALE Studio currently requires, all the improvements provided by this game are making it into the new version of engine and IDE (the first to be publicly released) with Windows, Linux and Android support.

How to participate

We would like to encourage you to take part in this exciting revolution that the Haskell community is experiencing. Become an active member, write Haskell code. Create your own programs and games, and learn about the beauty in the language.

A simplified version of a breakout game was released quite recently on github. You can hack around with it as much as you want. If you do something interesting with it, we’d love to know.

We publish regular updates on our Facebook page and Twitter account. We would be very grateful if you could help by following us on social networks. This helps you and your friends be aware of our progress and hopefully bring more people into the Haskell community.

Some people are being very generous and giving us micro-donations (so far, via Flattr; there’s a button right below). Donations will be used exclusively to make real Haskell games for multiple platforms, including Android. If you can donate something, by any method, it will be very appreciated.